CODECHECK in Practice: How TU Delft and 4TU.ResearchData Are Making Reproducibility Happen

How can we make reproducibility a routine part of the publishing process? That question is at the heart of a new pilot bringing together the TU Delft Digital Competence Centre (DCC) and the 4TU.ResearchData repository with one goal: offer researchers a simple way to verify the reproducibility of their research software.

What Is CODECHECK?

CODECHECK is a community-led initiative that helps verify the computational reproducibility of scientific research. A volunteer, known as a codechecker, runs the author’s code to reproduce the results of a publication. The goal is not to validate scientific correctness or to assess code quality, but to confirm that the code runs and reproduces the reported results.

If the check succeeds, a CODECHECK Certificate is issued. It documents what was checked, who did the checking, and what steps were taken. The certificate is published on Zenodo and can be linked to the original publication or dataset.

This transparent process increases trust, improves documentation, and supports Open Science principles.

From ‘Little Demand’ to a Working Pilot

Building on the momentum of the CODECHECKing Goes NL project, the TU Delft DCC began offering CODECHECK as a service to TU Delft researchers in autumn 2024. It soon ran into a familiar problem: few researchers requested reproducibility checks. Meanwhile, 4TU.ResearchData was already hosting research software and data outputs – but lacked the capacity to verify reproducibility.

The solution? Combine strengths. The 4TU.ResearchData repository became the entry point; the TU Delft DCC, the service provider.

How It Works

The workflow is straightforward:

- A researcher publishes software in 4TU.ResearchData.

- The author is invited to participate in a CODECHECK.

- If interested, they share the relevant data and the corresponding article or preprint.

- A TU Delft codechecker attempts to run the code. If needed, the author is contacted to clarify setup or fix issues.

- Once results are reproduced (note: not validated for scientific correctness), a Certificate is issued.

- The certificate is linked to the dataset landing page and marked with a “Code Works” badge.

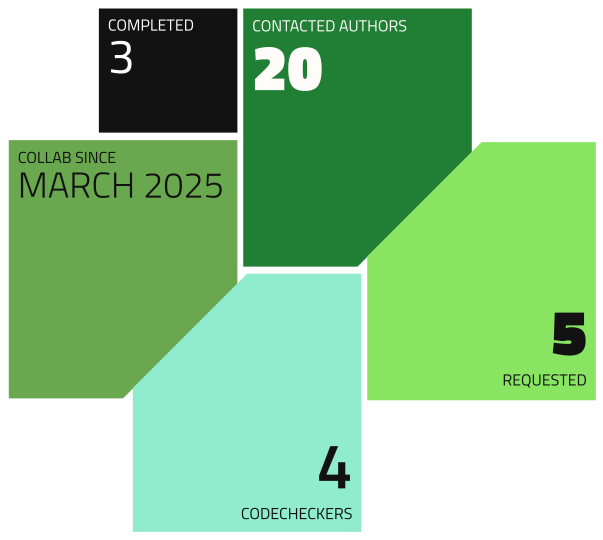
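For readers who think in code, the steps above can be sketched as a small state model. This is only an illustration: the stage names and the `next_stage` and `run_workflow` helpers are hypothetical and are not part of any CODECHECK or 4TU.ResearchData tooling.

```python
from enum import Enum


class Stage(Enum):
    """Hypothetical stage names mirroring the six workflow steps."""
    PUBLISHED = 1         # software is published in 4TU.ResearchData
    INVITED = 2           # author is invited to participate
    MATERIALS_SHARED = 3  # author shares data and the article or preprint
    CHECKING = 4          # codechecker attempts to run the code
    CERTIFIED = 5         # results reproduced, certificate issued
    BADGED = 6            # certificate linked and badge shown


def next_stage(stage, *, author_interested=True, results_reproduced=True):
    """Return the stage that follows `stage` in this simplified model."""
    if stage is Stage.INVITED and not author_interested:
        return Stage.INVITED   # nothing happens without the author's consent
    if stage is Stage.CHECKING and not results_reproduced:
        return Stage.CHECKING  # author is contacted, then the check is retried
    if stage is Stage.BADGED:
        return Stage.BADGED    # final stage
    return Stage(stage.value + 1)


def run_workflow():
    """Walk the happy path and return the names of the stages visited."""
    stage = Stage.PUBLISHED
    path = [stage.name]
    while stage is not Stage.BADGED:
        stage = next_stage(stage)
        path.append(stage.name)
    return path


if __name__ == "__main__":
    print(" -> ".join(run_workflow()))
```

The model shows the two points at which the process can pause: when the author does not take up the invitation, and when the code does not yet reproduce the results and the author is asked to clarify the setup or fix issues.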

Collaboration in Numbers (as of June 2025)

CODECHECKed publications

So far, three software publications hosted on 4TU.ResearchData have successfully passed reproducibility checks and earned a shiny “CODE WORKS” badge. This badge signals that the code runs as expected and produces the published results — a big step toward transparency and reusability.

Congratulations to the authors for making their work more reproducible and FAIR!

- https://data.4tu.nl/datasets/e64c61d3-deb5-4aad-af60-92d92755781f/3

- https://data.4tu.nl/datasets/e06f14b2-d884-4d1a-88fd-4ee8ebc3a98e/1

- https://data.4tu.nl/datasets/2c221b54-a20b-4659-99d2-af4a9a114b60/2

In their own words

Reproducibility is more than a technical step; it’s a collaborative process. Here’s how one participant described the CODECHECK pilot:

“I think reproducibility is extremely important: If others cannot reproduce your results, the scientific value is simply very low. You can explain the method in your paper, of course, but what is code if not the most detailed explanation possible? I think that if you write code for your paper, you have the scientific obligation to share it. And so I did.”

– Florine, PhD Candidate

What’s Next?

The pilot is already yielding insights – and enthusiasm. The next step is to extend the model to all 4TU partner institutions, with a scalable workflow, onboarding materials, and training opportunities for codecheckers. The goal: make reproducibility checks a standard option alongside software publication, not an afterthought.

Want to get involved?

Would you like to contribute to more reproducible, trustworthy science?

- Do you have a publication or software you’d like to get CODECHECKed?

We’re happy to explore whether your project is a good fit for the pilot. All you need is a published dataset, script or notebook, and a published article or preprint.

- Are you curious about becoming a codechecker yourself?

If you enjoy running other people’s code, spotting reproducibility pitfalls, or improving open research workflows, we’d love to hear from you!

Get in touch:

Read more about the TU Delft DCC Reproducibility Check service, or contact 4TU.ResearchData directly if you are interested in CODECHECK as the author of a publication.

Let’s make reproducibility a common practice — one check at a time.

“Parts of this post were drafted with the help of AI (ChatGPT) and edited by the author.”

Written by Aleksandra Wilczynska